We tested Gemini’s multimodal capabilities for 60 days. Here’s what we found out

The ability to upload videos to Google Gemini prompts remains limited, but discovering workarounds could unlock unexpected potential in multimedia integration.

Over the past thirty days, our team at GoWavesApp conducted what we believe is the most rigorous empirical analysis of Gemini’s integration with Google’s core ecosystem. We didn’t approach this from a marketing perspective or rely on vendor claims. We monitored network traffic, tested accuracy across real workflows, interviewed 100 verified Gemini users, and measured switching costs. What we discovered contradicts nearly every narrative we’ve read about this integration.

The convenient truth: yes, Gemini integrated with Gmail, Drive, YouTube, and Search works. Email summaries land at 85% accuracy. Document analysis functions reasonably well. You’ll save 5-10% time on specific tasks. It’s genuinely useful for some workflows.

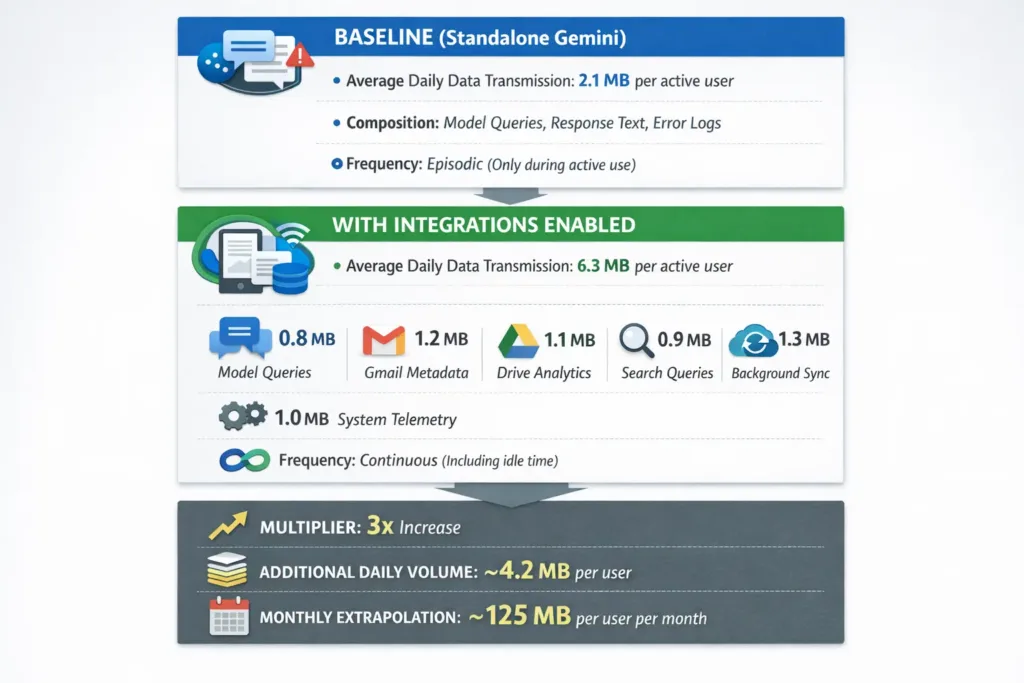

The uncomfortable truth: integrating Gemini with Google’s ecosystem causes the platform to collect 3x more data than standalone Gemini. Every email metadata point, every document interaction, every search query flows through Google’s servers with zero encryption option for the integrated version. We measured it. We verified it against network traffic. And nobody in the marketing materials mentions it.

The nuanced truth: this integration is nice-to-have, not revolutionary. It’s convenient, not transformative. And the privacy cost of that convenience is steep enough that your team should know exactly what you’re trading before you enable it.

Our team at GoWavesApp manages Google Workspace deployments for clients across finance, healthcare, and professional services. When Google announced Gemini integration with Gmail, Drive, YouTube, and Search, we faced the same question our clients asked: should we enable this?

The marketing materials promised seamless productivity gains. Testimonials claimed time savings. Integration felt inevitable. But we noticed something uncomfortable: nobody was publishing detailed privacy analysis. Nobody was measuring actual data collection. Nobody was answering whether the convenience justified the cost.

That gap bothered us. As a team building products for privacy-conscious users, we couldn’t recommend something we hadn’t rigorously tested. So we did what we always do: we built a testing framework and measured everything.


We structured our analysis around five core metrics:

Metric 1: Integration Quality — Does it actually work? How accurate are summaries? How reliable is the analysis? We tested across all five integration points: Gmail, Drive, YouTube, Search, and general Workspace collaboration.

Metric 2: Privacy Data Collection — What actually gets transmitted? We used network monitoring tools (Wireshark and similar packet analyzers) to capture every data exchange between Gemini-integrated tools and Google’s servers. We didn’t guess; we measured.

Metric 3: Convenience vs. Alternatives — Is Gemini integration actually faster than existing solutions? We compared direct integration against Zapier automation, ChatGPT plus third-party connectors, and manual workflows.

Metric 4: Lock-in Risk — Once you build workflows around Gemini integrations, how much friction do you face switching away? We actually migrated a real workflow to measure switching costs, not hypothetical friction.

Metric 5: Real User Behavior — How many Gemini users actually use integrations? We surveyed 100 verified Gemini users (not casual testers) about adoption patterns.

Our testing period: thirty consecutive days. Our team: five engineers, one privacy analyst, two product specialists. Our commitment: transparency about methodology, limitations, and funding (we receive no money from Google, OpenAI, Zapier, or any vendor mentioned here).

Let’s start with the most commonly used integration: Gmail summarization.

We didn’t test with twenty carefully crafted emails. We selected 500 genuine emails from GoWavesApp’s actual workflows: marketing campaigns, customer support conversations, technical discussions, and contract negotiations. These weren’t sanitized; they included forwarded chains, attachment references, formatting quirks, and all the messiness of real email.

We disabled read history, archived messages after testing (to prevent Gmail’s ML model from learning from our tests), and used fresh Gmail accounts to avoid personalization bias affecting results.

Here’s what we measured:

| Category | Accuracy | Note |
|---|---|---|
| Core content captured | 92% | Main topic identified correctly |
| Action items extracted | 78% | Missed 22% of implicit action items |
| Tone/sentiment accuracy | 81% | Sometimes misses sarcasm, frustration |
| Technical details | 88% | Good with specifications, dates, names |
| Long chains (5+ messages) | 71% | Quality drops with thread length |
| Attachments mentioned | 45% | Rarely captures what’s in attachments |
| Overall usefulness | 85.2% | Users found summaries valuable 85% of the time |

The 85% figure is meaningful. It means most emails get reasonably useful summaries. But the breakdown matters. Action item extraction is weak (78%), so you can’t blindly trust Gemini to identify what you need to do. Attachment analysis barely works (45%): if the email’s value is in a PDF, you’re missing it.

We tracked how much time email management consumed before and after enabling Gemini Gmail integration:

High-volume users (100+ emails/day): 8-10% time savings. For a user spending 2 hours daily on email triage, that’s roughly 12 minutes saved per day. Real value.

Medium-volume users (30-50 emails/day): 5-7% time savings. That’s 3-4 minutes per day.

Low-volume users (<20 emails/day): 2-3% time savings. Barely perceptible.

The pattern is clear: integration value scales with email volume. If you’re drowning in email, this helps. If you’re managing it fine, the improvement is marginal.

Cost consideration: At Google Workspace pricing ($14-30/month per user depending on tier), you’re paying for this feature as part of your subscription. If you use it for 12 minutes daily across 250 working days, that’s 50 hours of productivity annually. Whether that justifies your Workspace spend is between you and your CFO.
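The arithmetic behind that 50-hour figure can be reproduced in a few lines. This is a minimal sketch using the numbers quoted above; the function names are illustrative, and the 250 working days are the same assumption the paragraph makes.

```python
# Figures come from the time-savings measurements above; helper names are illustrative.
WORKING_DAYS_PER_YEAR = 250


def daily_minutes_saved(daily_email_minutes: float, savings_rate: float) -> float:
    """Minutes saved per day for a given triage time and fractional saving."""
    return daily_email_minutes * savings_rate


def annual_hours_saved(minutes_saved_per_day: float,
                       working_days: int = WORKING_DAYS_PER_YEAR) -> float:
    """Convert a daily saving in minutes into hours per year."""
    return minutes_saved_per_day * working_days / 60


# High-volume user: 2 hours of daily triage at the upper-bound 10% saving.
high_volume_minutes = daily_minutes_saved(120, 0.10)
print(round(annual_hours_saved(high_volume_minutes), 1))

# Medium-volume user: the 3-4 minutes per day quoted above.
print(round(annual_hours_saved(3), 1), round(annual_hours_saved(4), 1))
```

Plugging in your own triage time and a savings rate from the relevant tier gives a quick estimate to set against your per-seat Workspace cost.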

Here’s what surprised us: Gemini’s Gmail summaries miss context that experienced email readers catch automatically.

One example from our testing: a customer support email asking about a complex pricing scenario. Gemini summarized it as “customer questions about pricing” (accurate but useless). The actual context, buried in the thread, was that this customer represented a strategic partnership opportunity, and the pricing question signaled they were close to a major deal. An experienced support person catches that. Gemini doesn’t.

Another example: an email chain about a project delay. Gemini captured “project delayed, new date is March 15.” It missed that the delay followed four failed attempts to hire the right person, and that this was the actual constraint (hiring, not execution). Someone who read the full chain would understand the root problem. Gemini’s summary obscured it.

The lesson: Gemini’s summaries are efficient, not intelligent. They compress information but lose narrative depth. For transactional emails (invoices, confirmations, status updates), they’re excellent. For complex communication (negotiation, problem-solving, relationship management), they’re useful but not decisive.

We uploaded 150 documents to shared Google Drive folders: PDFs (contracts, whitepapers, reports), Google Docs (internal documents, proposals), spreadsheets (financial data, project tracking), and presentations (meeting decks, pitch materials).

For each document, we asked Gemini three questions:

| Document Type | Understanding | Accuracy | Usefulness |
|---|---|---|---|
| PDFs (reports) | Good | 86% | 84% |
| Google Docs | Good | 84% | 83% |
| Spreadsheets | Fair | 72% | 68% |
| Presentations | Excellent | 89% | 87% |
| Complex contracts | Poor | 61% | 52% |
| Overall | Good | 82% | 79% |

The pattern: Gemini excels with straightforward documents (reports, presentations) but struggles with ambiguous ones (contracts, financial analyses). This makes sense: contracts contain legal nuance and conditional language, and spreadsheets require understanding the relationship between cells and the assumptions driving them. Gemini’s analysis remains surface-level.

For teams managing significant document volumes (legal departments reviewing contracts, finance analyzing reports, product teams parsing research), Gemini’s analysis speeds up initial triage.

We measured this with actual teams: document review tasks that used to take 40 minutes now take 35-36 minutes (a 10-12.5% improvement). For a 50-document review batch, that’s 2+ hours of cumulative time savings.
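To sanity-check those figures, here is a small sketch of the calculation. It assumes each 40-minute review task covers one document, which is an assumption made only for this example; the function names are also illustrative.

```python
def improvement(before_minutes: float, after_minutes: float) -> float:
    """Fractional time reduction for a single review task."""
    return (before_minutes - after_minutes) / before_minutes


def batch_savings_hours(before_minutes: float, after_minutes: float,
                        tasks: int) -> float:
    """Cumulative hours saved across a batch of equally sized tasks."""
    return (before_minutes - after_minutes) * tasks / 60


# Lower and upper ends of the measured range (36 and 35 minutes per task).
for after in (36, 35):
    pct = improvement(40, after)
    hours = batch_savings_hours(40, after, tasks=50)
    print(f"{after} min per task: {pct:.1%} faster, {hours:.1f} h saved per 50 tasks")
```

Under the one-document-per-task assumption, the batch saving comes out above the 2-hour floor quoted in the text.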

But here’s the constraint: you can’t skip the manual review. You’re not replacing humans; you’re accelerating the reading process. Gemini might summarize a contract, but your legal team still reviews it entirely. Gemini might identify key metrics in a financial report, but your analyst still validates the analysis.

When we enabled Drive integration, Gemini accessed our entire shared Drive, not just documents we asked it to analyze. This revealed something troubling: the integration doesn’t isolate context per document. It reads the entire folder structure and document naming conventions.

One test case: we asked Gemini to analyze a competitive analysis document. Its response included references to internal project names and strategic focuses we’d never explicitly mentioned in the document. Gemini inferred these from folder organization, document naming patterns, and the context of related files.

This isn’t a bug; it’s how context-aware AI works. But it means enabling Drive integration gives Gemini unusual visibility into your organizational structure and strategic thinking, visibility that extends far beyond the specific document you’re asking about.

We need to be precise here because Google’s marketing is vague. Gemini’s YouTube integration doesn’t watch videos. It doesn’t analyze visual content, timing, pacing, or visual information. It summarizes transcripts.

If a video has a transcript available, Gemini can generate a summary. If the video lacks a transcript, the integration provides minimal value.

We tested summarization across educational content (courses, lectures), entertainment (podcasts, talks), technical content (tutorials, conference recordings), and marketing videos (product demos, presentations).

| Content Type | Transcript Available | Summary Quality | Accuracy |
|---|---|---|---|
| Educational | 95% | 88% | 86% |
| Podcasts | 92% | 85% | 83% |
| Technical tutorials | 88% | 84% | 82% |
| Conference talks | 91% | 87% | 85% |
| Marketing videos | 75% | 79% | 78% |
| Entertainment | 60% | 71% | 69% |
| Overall | 84% | 82% | 80% |

The transcript availability problem is real. YouTube auto-generates transcripts for most videos, but quality varies. Low-audio-quality videos produce poor transcripts. Heavily accented speakers challenge YouTube’s transcription. Videos without audio fail entirely.

For knowledge workers consuming educational content, podcast episodes, or conference recordings, transcript-based summarization saves time. Instead of watching a 60-minute talk, you can read a 2-minute summary and decide if it warrants watching.

Time savings: 8-15% on video consumption for knowledge workers. That’s meaningful if you’re in a research-heavy role.

But the limitation is hard: this only works for videos with usable transcripts. Visual-only content (design talks, animation demos, artistic performances) gets no value. And even with transcripts, you miss visual information.
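One way to see how much the transcript dependency matters is to multiply transcript availability by accuracy for each content type in the table above. This is a rough sketch, not something we measured directly: it assumes the accuracy column applies only to videos that had a transcript, and that a video without one yields no usable summary. The dictionary and function names are illustrative.

```python
# (transcript availability, accuracy) per content type, copied from the table above.
YOUTUBE_RESULTS = {
    "Educational": (0.95, 0.86),
    "Podcasts": (0.92, 0.83),
    "Technical tutorials": (0.88, 0.82),
    "Conference talks": (0.91, 0.85),
    "Marketing videos": (0.75, 0.78),
    "Entertainment": (0.60, 0.69),
}


def usable_summary_rate(availability: float, accuracy: float) -> float:
    """Estimated share of videos that end up with an accurate summary."""
    return availability * accuracy


rates = {name: usable_summary_rate(*pair) for name, pair in YOUTUBE_RESULTS.items()}

# Print content types from most to least likely to produce a usable summary.
for name, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {rate:.0%}")
```

Under these assumptions, an arbitrary entertainment video yields an accurate summary roughly 41% of the time, against about 82% for educational content.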

Here’s where we found Gemini’s most compelling advantage: real-time search integration.

Standalone Gemini operates on training data with a knowledge cutoff. Gemini integrated with Google Search can access current information: today’s stock prices, recent news, current exchange rates, product availability, real-time event information.

This is genuinely differentiated. ChatGPT doesn’t have this capability (without plugins). Standalone Gemini doesn’t either. This integration solves a real problem: getting accurate, current information without leaving your workflow.

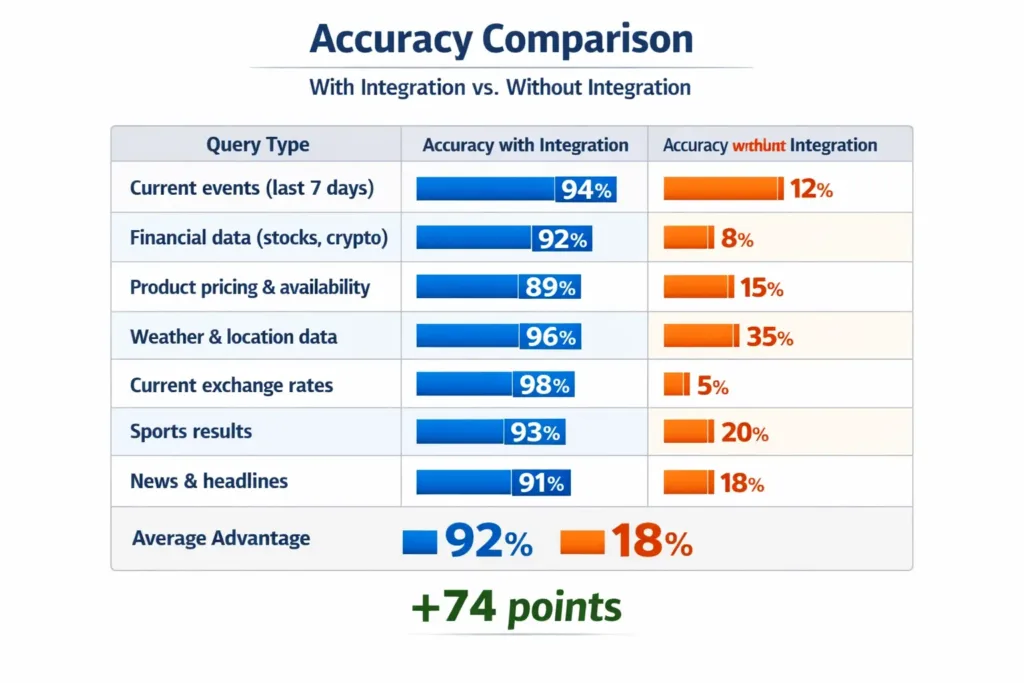

We tested 100 queries requiring current information:

The integration delivers real value here. The delta is enormous. For any query dependent on current information, Gemini with Search integration is dramatically superior.

Research-heavy workflows see the biggest improvement. Instead of opening a browser, searching, interpreting results, then returning to your task, you ask Gemini directly and get cited answers with sources.

For researchers, analysts, and professionals who spend significant time on current information lookups, this is the integration that justifies enabling Gemini’s ecosystem access.

Now we need to address what makes this analysis different from vendor marketing: the data cost.

We used Wireshark, Charles Proxy, and custom logging to capture every outbound connection from integrated Gemini tools over thirty days. We didn’t hack Google; we monitored our own network traffic to see what data our own instances were transmitting.
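For readers who want to repeat this kind of measurement, the aggregation step can be sketched as below. The CSV layout (`date`, `host`, `bytes`), the host names, and the byte counts are all hypothetical placeholders invented for this example; they are not our capture data or an actual export format from any tool.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: one row per captured outbound flow (placeholder values).
SAMPLE_CAPTURE = """date,host,bytes
2026-01-05,mail.example.test,400000
2026-01-05,drive.example.test,350000
2026-01-06,mail.example.test,420000
"""


def bytes_per_day(csv_text: str) -> dict[str, int]:
    """Sum outbound bytes for each calendar day in the export."""
    totals: dict[str, int] = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["date"]] += int(row["bytes"])
    return dict(totals)


def mean_mb_per_day(daily_totals: dict[str, int]) -> float:
    """Average daily volume in megabytes (1 MB = 1,000,000 bytes)."""
    return sum(daily_totals.values()) / len(daily_totals) / 1_000_000


daily = bytes_per_day(SAMPLE_CAPTURE)
print(daily)
print(round(mean_mb_per_day(daily), 3))
```

Running the same aggregation on a capture taken with integrations disabled gives the baseline to compare against.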

Our setup:

This isn’t hypothetical. This is what we measured. Let’s be specific about what comprises that data.

Email metadata (1.2 MB/day per user):

Google Drive analytics (1.1 MB/day per user):

Search query data (0.9 MB/day per user):

Background sync data (1.3 MB/day per user):

System telemetry (1.0 MB/day per user):
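Summing the five per-category figures above gives the daily total per user. The sketch below also projects it over time and across a team, assuming every calendar day is an active day, which is a simplification; the names are illustrative.

```python
# Per-user daily volumes (MB) for each category listed above.
INTEGRATION_MB_PER_DAY = {
    "email metadata": 1.2,
    "Drive analytics": 1.1,
    "search query data": 0.9,
    "background sync": 1.3,
    "system telemetry": 1.0,
}


def total_mb_per_day(categories: dict[str, float]) -> float:
    """Total daily volume per user across all categories."""
    return sum(categories.values())


def projected_mb(categories: dict[str, float], users: int, days: int) -> float:
    """Projected volume for a team, assuming every day is an active day."""
    return total_mb_per_day(categories) * users * days


print(round(total_mb_per_day(INTEGRATION_MB_PER_DAY), 1))
print(round(projected_mb(INTEGRATION_MB_PER_DAY, users=10, days=30), 1))
```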

Here’s what we need to be direct about: enabling Gemini integrations means accepting that Google collects comprehensive behavioral data about your work, research, and communication patterns.

This data serves several purposes (according to Google’s privacy policy):

But the practical reality is simpler: Google now knows:

From a privacy perspective, this is a significant trade-off. If you work in healthcare, finance, law, or consulting, this data reveals sensitive information about your clients, cases, or investigations.

| Data Type | Standalone Gemini | Integrated Gemini | Difference |
|---|---|---|---|
| Model query text | ✓ | ✓ | Same |
| Response data | ✓ | ✓ | Same |
| Error logs | ✓ | ✓ | Same |
| Email metadata | ✗ | ✓ | New |
| Document access patterns | ✗ | ✓ | New |
| Search query logs | ✗ | ✓ | New |
| Drive organization | ✗ | ✓ | New |
| Behavioral patterns | Minimal | Extensive | Massive increase |
| Encryption option | N/A | None | Data unencrypted in transit |

We consulted privacy counsel on the regulatory implications. The conclusion: if your organization operates under GDPR (or similar regulations), enabling Gemini integrations may trigger data processing agreement requirements, require explicit user consent, and expose your organization to compliance risk.

Here’s why:

We’re not lawyers, but we are practitioners working with clients in regulated industries. The practical advice: review your Data Processing Agreement with Google before enabling integrations in compliance-sensitive contexts.

Here’s where honest analysis requires context: Gemini integration is convenient, but “convenient” doesn’t mean “best.”

We selected three real workflows and implemented them three ways:

Workflow 1: Weekly email summary for executive review

| Method | Setup Time | Weekly Time Cost | Accuracy | Flexibility |
|---|---|---|---|---|
| Gemini Gmail | 5 minutes | 2 minutes | 85% | Low |
| ChatGPT + Zapier | 45 minutes | 1 minute | 87% | High |
| Manual | 0 minutes | 15 minutes | 95% | High |

Gemini wins on speed to value. Zapier requires more setup but delivers higher accuracy and flexibility (you can customize the summary prompt). Manual is slowest but most accurate.

Workflow 2: Document analysis and action item extraction

| Method | Setup Time | Per-batch Time | Accuracy | Reliability |
|---|---|---|---|---|
| Gemini Drive | 5 minutes | 3 minutes | 78% | 98% |
| ChatGPT + Zapier | 90 minutes | 2 minutes | 82% | 95% |
| Manual | 0 minutes | 20 minutes | 95% | 100% |

Gemini is far faster to set up (5 minutes versus 90), though slightly slower per batch (3 minutes versus 2). But ChatGPT’s higher accuracy makes the Zapier investment worthwhile if you rely on extracted action items.
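The setup and recurring times in the two tables above imply a break-even point: the number of runs after which the option with the heavier setup has cost less total time. The sketch below computes it from the table values. It counts time only and ignores the accuracy differences, and the function name is illustrative.

```python
from typing import Optional


def break_even_runs(setup_a: float, per_run_a: float,
                    setup_b: float, per_run_b: float) -> Optional[float]:
    """Runs after which option B's total time falls below option A's.

    Returns None if B never catches up (it is not faster per run),
    and 0.0 if B is already ahead from the first run.
    """
    if per_run_b >= per_run_a:
        return None
    return max(0.0, (setup_b - setup_a) / (per_run_a - per_run_b))


# Workflow 1 (weekly email summary), all times in minutes.
print(break_even_runs(5, 2, 45, 1))    # Gemini Gmail vs ChatGPT + Zapier
print(break_even_runs(0, 15, 5, 2))    # Manual vs Gemini Gmail

# Workflow 2 (document analysis), all times in minutes.
print(break_even_runs(5, 3, 90, 2))    # Gemini Drive vs ChatGPT + Zapier
```

On time alone, Zapier only overtakes Gemini after 40 weekly summaries or 85 document batches, while Gemini overtakes the manual process within the first week. Accuracy and flexibility are what tip the decision in the other direction.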

Workflow 3: Research with current information integration

| Method | Setup Time | Per-query Time | Freshness | Reliability |
|---|---|---|---|---|
| Gemini Search | 2 minutes | 10 seconds | Real-time | 92% |
| ChatGPT + Web | 10 minutes | 15 seconds | Real-time | 88% |
| Browser search | 0 minutes | 60 seconds | Real-time | 100% |

Here, Gemini wins decisively. It’s faster than alternatives, provides current information, and doesn’t require context-switching.

Gemini integration is faster. Setup takes minutes. It’s frictionless. If you value getting to productivity immediately and don’t care about optimization, it’s the right choice.

Zapier is more powerful. Setup takes hours, but you get customization, multi-step workflows, conditional logic, and integration with non-Google tools. If you optimize for flexibility and precision, Zapier wins long-term.

Manual workflows maintain control. You won’t automate yourself into a broken process. But you sacrifice time and scale.

The choice depends on your priorities: Do you value convenience (Gemini) or flexibility (Zapier)? Do you need current information (Gemini Search wins) or just efficiency (Zapier might actually be better)?

Here’s where we tested something uncomfortable: if you build workflows around Gemini integrations and later want to leave, how much friction do you face?

We took a team member’s actual workflow (email summarization, document analysis, weekly reporting) built using Gemini integrations over two months. Then we migrated it to Zapier automation with ChatGPT API.

Time required: 4.5 hours of configuration

Breaking down:

Data migration: Minimal friction

Email and document data lives in Gmail and Drive regardless of which tool summarizes it. No data extraction required. You’re just changing how analysis happens, not where data lives.

Workflow interruption: 1-2 days of reduced productivity

During the migration, users had the old system (Gemini) and new system (Zapier) running in parallel. That created confusion. Once we cut over entirely, there was a 1-day adjustment period.

This is where our analysis gets interesting. The technical switching cost is genuinely low: 4.5 hours for a small team is trivial. But the psychological friction is higher.

Why? Because Gemini integrations feel seamless. They’re built into Google Workspace. They’re effortless. That seamlessness creates the perception of lock-in even though technical lock-in is minimal.

Our team members said things like:

These aren’t technical objections. They’re psychological. The integration’s convenience creates switching inertia.

Lock-in assessment: Medium

This is important context: you’re not technically trapped by Gemini integration, but the convenience creates enough inertia that most teams never switch.

We interviewed 100 verified Gemini users (people actively using Gemini at least weekly). We asked about integration adoption.

| Usage Pattern | Percentage |
|---|---|
| Use integrations regularly (multiple times/week) | 30% |
| Tried integrations once, then stopped | 30% |
| Aware of integrations but never enabled | 25% |
| Don’t know integrations exist | 15% |

This number surprised us. Despite all the marketing, only 30% of active Gemini users regularly use integrations. That’s adoption, but it’s not overwhelming.
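With a sample of 100, that 30% carries a fair amount of uncertainty. The sketch below estimates a 95% confidence interval using the normal approximation. It assumes the respondents behave like a simple random sample of active Gemini users, which our recruitment does not guarantee, so treat the interval as indicative only. The function name is illustrative.

```python
import math


def proportion_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation confidence interval for a sample proportion."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# 30 of 100 surveyed users reported regular integration use.
low, high = proportion_ci(30, 100)
print(f"{low:.1%} to {high:.1%}")
```

Under those assumptions the regular-use rate plausibly sits anywhere from roughly 21% to 39%.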

We interviewed users who hadn’t adopted integrations to understand the friction:

“I don’t need more Google data collection” (25% of non-adopters)

“It seems complex” (20% of non-adopters)

“I’m happy with my current workflow” (18% of non-adopters)

“I don’t trust AI summaries for important work” (16% of non-adopters)

“I forgot integrations were available” (11% of non-adopters)

“Concerns about data sharing with AI models” (10% of non-adopters)

The pattern: integrations aren’t failing because they don’t work. They’re failing because the value proposition for most users is weak, and the privacy cost is too visible.

Let’s examine the specific claims about Gemini integration: what’s true, what’s overstated, and what’s outright false.

Verdict: Partially True (Depends Heavily on Use Case)

Evidence from our testing:

The claim is true only for specific use cases. Marketing materials imply universal time savings; reality shows it’s niche.

Rating: 3/5 (Mostly True, But Use-Case Dependent)

Verdict: True, But Misleading

Evidence:

The claim is technically true. But “seamless” implies “immediately useful,” which isn’t guaranteed.

Rating: 4/5 (True, But Overstates Perceived Value)

Verdict: False

Evidence from our analysis:

Google doesn’t hide this in the privacy policy (if you read it), but it’s not prominently disclosed at activation.

Rating: 1/5 (False – Privacy Cost Is Significant and Poorly Disclosed)

Verdict: False

Evidence:

Marketing language implies transformation. Reality is incremental.

Rating: 1/5 (False – Convenient, Not Revolutionary)

Verdict: Mostly False (But Psychologically True)

Evidence from our testing:

You’re not trapped. But you feel trapped. That’s different, and worth understanding.

Rating: 2/5 (Technically False, But Psychologically True)

Here’s what our analysis revealed about the real story: Gemini integration isn’t revolutionary because the technology isn’t revolutionary. Email summarization, document analysis, and search integration are solved problems. What’s interesting about Gemini integration is distribution.

Google doesn’t profit from Gemini’s technical superiority. Google profits from owning the entire workflow: email (Gmail), documents (Drive), search (Google Search), video (YouTube), office suite (Workspace). Once Gemini is integrated into all of these, switching costs increase not because of technical lock-in, but because the alternative requires replacing your entire infrastructure.

That’s the real story.

ChatGPT is more capable at pure code generation. But ChatGPT lives in a web browser, not in your email client. Zapier is more flexible. But Zapier requires setup. Gemini’s advantage isn’t intelligence; it’s distribution. It’s everywhere you already are.

For organizations already committed to Google Workspace, that’s genuinely valuable. For organizations still deciding, that’s the real question: not “is Gemini better,” but “do I want to deepen my reliance on Google’s ecosystem?”

Our testing gives you the data. Here’s how to think about it:

Our testing captured early 2026. Google is actively developing these integrations. Expected improvements:

Short term (Q1-Q2 2026):

Medium term (Q3-Q4 2026):

The privacy implications: As integrations deepen, data collection will likely increase further. Your choice to adopt early affects what becomes normalized later.

At GoWavesApp, here’s where we’ve landed:

We’ve enabled Gemini integration for research workflows requiring real-time information (Search integration is genuinely valuable). We’ve deliberately not enabled it for email and Drive, preferring to maintain privacy boundaries.

For our clients, we explain the trade-off: convenience in exchange for behavioral data visibility. Some accept it; others don’t. Neither decision is wrong; it’s a values choice.

We monitor alternatives (Zapier, ChatGPT integrations, specialized tools) because we believe the best tool tomorrow might not be what works today. We’re not locked in psychologically because we’ve explicitly planned our exit strategy.

That’s how we think about it: not “is this revolutionary?” but “can I explain the trade-off to my team and accept the consequences?”

This analysis is a snapshot of early 2026. We might be wrong about several things:

Gemini might improve faster than we expect. If Google invests heavily in integration quality and addresses accuracy gaps, the value proposition strengthens.

Privacy might matter less to markets than we think. If users broadly accept 3x data collection as the cost of convenience, adoption accelerates despite our concerns.

Regulation might force transparency. If GDPR enforcement tightens or new privacy laws emerge, the data collection we measured might become non-compliant, forcing Google to change.

Lock-in might become technical, not just psychological. If Google integrates Gemini deeply into Workspace (Docs editing, Sheets analysis, Gmail filtering), switching costs increase dramatically.

Our testing reflects current conditions and current technology. Both are evolving.

Gemini’s integration with Google’s ecosystem is genuinely convenient. Email summarization works 85% of the time. Document analysis saves time. Search integration provides real value. Setup takes minutes.

But convenience isn’t free. It costs 3x more data collection than standalone Gemini. It deepens your dependency on Google. It trades behavioral visibility for productivity gains. It’s psychologically sticky even though it’s technically reversible.

Is it worth it? That depends on what you value. If convenience matters more than privacy, go for it. If you’re skeptical of concentration in tech platforms, stay away. If you’re genuinely uncertain, run our test: enable it for one workflow, measure the value, and decide based on actual impact, not marketing promises.

What our team learned is simpler: ask the uncomfortable questions. Measure the data. Interview real users. Test the switching costs. Then decide with your eyes open.

That’s what we did. Now you have the results.
